S3 Archiving

LogScale supports archiving ingested logs to Amazon S3. The archived logs are then available for further processing in any external system that integrates with S3. The files written by LogScale in this format are not searchable by LogScale — this is an export meant for other systems to consume.

When S3 Archiving is enabled, all existing events in the repository are backfilled into S3. New events are then archived by a periodic job that runs on every LogScale node and looks for new, unarchived segment files. The segment files are read from disk, streamed to an S3 bucket, and marked as archived in LogScale.

An administrator must set up archiving per repository. After selecting a repository in LogScale, the configuration page is available under Settings.

Note

For slow-moving datasources it can take some time before segment files are completed on disk and then made available for the archiving job. In the worst case, before a segment file is completed, it must contain a gigabyte of uncompressed data or 30 minutes must have passed. The exact thresholds are those configured as the limits on mini segments.

Important

Until 1.145.0, S3 archiving does not support uploading to S3 buckets where object locking is enabled.

From 1.220.0, LogScale supports archiving to S3 buckets where object locking is enabled.

For more information on segment files and datasources, see segment files and Datasources.

S3 Archive Storage Format and Layout

When uploading a segment file, LogScale creates the S3 object key based on the tags, start date, and repository name of the segment file. The resulting object key makes the archived data browsable through the S3 management console.

LogScale uses the following pattern:

REPOSITORY/TYPE/TAG_KEY_1/TAG_VALUE_1/../TAG_KEY_N/TAG_VALUE_N/YEAR/MONTH/DAY/START_TIME-SEGMENT_ID.gz

Where:

REPOSITORY: Name of the repository

type: Keyword (static) to identify the format of the enclosed data.

TAG_KEY_1: Name of the tag key (typically the name of the parser used to ingest the data, from the #type field)

TAG_VALUE: Value of the corresponding tag key.

YEAR: Year of the timestamp of the events

MONTH: Month of the timestamp of the events

DAY: Day of the timestamp of the events

START_TIME: The start time of the segment, in the format HH-MM-SS

SEGMENT_ID: The unique segment ID of the event data

An example of this layout can be seen in the file list below:

$ s3cmd ls -r s3://logscale2/accesslog/

2023-06-07 08:03 1453 s3://logscale2/accesslog/type/kv/2023/05/02/14-35-52-gy60POKpoe0yYa0zKTAP0o6x.gz

2023-06-07 08:03 373268 s3://logscale2/accesslog/type/kv/humioBackfill/0/2023/03/07/15-09-41-gJ0VFhx2CGlXSYYqSEuBmAx1.gz

Read more about Parsing Event Tags.
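The key layout described above can be taken apart programmatically when an external system needs to filter archived files by repository, tag, or date. The following is a minimal sketch, not part of LogScale: the names `ArchivedSegmentKey` and `parse_archive_key` are hypothetical, and it assumes, as in the file list above, that the components between the repository name and the date come in key/value pairs and that the bucket name has already been stripped from the key.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ArchivedSegmentKey:
    """Components of an archived object key (hypothetical helper type)."""
    repository: str
    tags: Dict[str, str]
    date: str        # YYYY-MM-DD, built from the YEAR/MONTH/DAY components
    start_time: str  # HH-MM-SS, exactly as it appears in the key
    segment_id: str


def parse_archive_key(key: str) -> ArchivedSegmentKey:
    """Split an object key such as
    'accesslog/type/kv/2023/05/02/14-35-52-<segment id>.gz' into its parts."""
    parts = key.split("/")
    # Minimum: repository, year, month, day, file name.
    if len(parts) < 5:
        raise ValueError(f"too few path components in key: {key!r}")

    repository = parts[0]
    year, month, day, filename = parts[-4:]

    # Assumption: everything between repository and date is key/value pairs.
    middle = parts[1:-4]
    if len(middle) % 2 != 0:
        raise ValueError(f"unpaired tag component in key: {key!r}")
    tags = dict(zip(middle[0::2], middle[1::2]))

    if not filename.endswith(".gz"):
        raise ValueError(f"expected a .gz file name in key: {key!r}")
    # START_TIME is HH-MM-SS, so the first three dashes belong to the time.
    pieces = filename[: -len(".gz")].split("-", 3)
    if len(pieces) != 4:
        raise ValueError(f"unexpected file name in key: {key!r}")
    hours, minutes, seconds, segment_id = pieces

    return ArchivedSegmentKey(
        repository=repository,
        tags=tags,
        date=f"{year}-{month}-{day}",
        start_time=f"{hours}-{minutes}-{seconds}",
        segment_id=segment_id,
    )
```

For the second key in the listing, this returns the repository `accesslog` with the tags `type=kv` and `humioBackfill=0`.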

File Format

LogScale supports two formats for storage: native format and NDJSON.

Native Format

The native format is the raw data, i.e. the equivalent of the @rawstring of the ingested data:

127.0.0.1 - - [07/Mar/2023:15:09:42 +0000] "GET /falcon-logscale/css-images/176f8f5bd5f02b3abfcf894955d7e919.woff2 HTTP/1.1" 200 15736 "http://localhost:81/falcon-logscale/theme.css" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36"
127.0.0.1 - - [07/Mar/2023:15:09:43 +0000] "GET /falcon-logscale/css-images/alert-octagon.svg HTTP/1.1" 200 416 "http://localhost:81/falcon-logscale/theme.css" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36"
127.0.0.1 - - [09/Mar/2023:14:16:56 +0000] "GET /theme-home.css HTTP/1.1" 200 70699 "http://localhost:81/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36"
127.0.0.1 - - [09/Mar/2023:14:16:59 +0000] "GET /css-images/help-circle-white.svg HTTP/1.1" 200 358 "http://localhost:81/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36"
127.0.0.1 - - [09/Mar/2023:14:16:59 +0000] "GET /css-images/logo-white.svg HTTP/1.1" 200 2275 "http://localhost:81/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36"

NDJSON Format

The default archiving format is NDJSON. When using NDJSON, the parsed fields are available along with the raw log line. This incurs some extra storage cost compared to storing only raw log lines, but makes the logs easier to process in an external system.

{"#type":"kv","#repo":"weblog","#humioBackfill":"0","@source":"/var/log/apache2/access_log","@timestamp.nanos":"0","@rawstring":"127.0.0.1 - - [07/Mar/2023:15:09:42 +0000] \"GET /falcon-logscale/css-images/176f8f5bd5f02b3abfcf894955d7e919.woff2 HTTP/1.1\" 200 15736 \"http://localhost:81/falcon-logscale/theme.css\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36\"","@id":"XPcjXSqXywOthZV25sOB1hqZ_0_1_1678201782","@timestamp":1678201782000,"@ingesttimestamp":"1691483483696","@host":"ML-C02FL14GMD6V","@timezone":"Z"}
{"#type":"kv","#repo":"weblog","#humioBackfill":"0","@source":"/var/log/apache2/access_log","@timestamp.nanos":"0","@rawstring":"127.0.0.1 - - [07/Mar/2023:15:09:43 +0000] \"GET /falcon-logscale/css-images/alert-octagon.svg HTTP/1.1\" 200 416 \"http://localhost:81/falcon-logscale/theme.css\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36\"","@id":"XPcjXSqXywOthZV25sOB1hqZ_0_3_1678201783","@timestamp":1678201783000,"@ingesttimestamp":"1691483483696","@host":"ML-C02FL14GMD6V","@timezone":"Z"}
{"#type":"kv","#repo":"weblog","#humioBackfill":"0","@source":"/var/log/apache2/access_log","@timestamp.nanos":"0","@rawstring":"127.0.0.1 - - [09/Mar/2023:14:16:56 +0000] \"GET /theme-home.css HTTP/1.1\" 200 70699 \"http://localhost:81/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36\"","@id":"XPcjXSqXywOthZV25sOB1hqZ_0_15_1678371416","@timestamp":1678371416000,"@ingesttimestamp":"1691483483696","@host":"ML-C02FL14GMD6V","@timezone":"Z"}
{"#type":"kv","#repo":"weblog","#humioBackfill":"0","@source":"/var/log/apache2/access_log","@timestamp.nanos":"0","@rawstring":"127.0.0.1 - - [09/Mar/2023:14:16:59 +0000] \"GET /css-images/help-circle-white.svg HTTP/1.1\" 200 358 \"http://localhost:81/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36\"","@id":"XPcjXSqXywOthZV25sOB1hqZ_0_22_1678371419","@timestamp":1678371419000,"@ingesttimestamp":"1691483483696","@host":"ML-C02FL14GMD6V","@timezone":"Z"}
{"#type":"kv","#repo":"weblog","#humioBackfill":"0","@source":"/var/log/apache2/access_log","@timestamp.nanos":"0","@rawstring":"127.0.0.1 - - [09/Mar/2023:14:16:59 +0000] \"GET /css-images/logo-white.svg HTTP/1.1\" 200 2275 \"http://localhost:81/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36\"","@id":"XPcjXSqXywOthZV25sOB1hqZ_0_23_1678371419","@timestamp":1678371419000,"@ingesttimestamp":"1691483483696","@host":"ML-C02FL14GMD6V","@timezone":"Z"}

A single NDJSON line is just a JSON object, which looks like this when formatted:

{
  "#humioBackfill" : "0",
  "#repo" : "weblog",
  "#type" : "kv",
  "@host" : "ML-C02FL14GMD6V",
  "@id" : "XPcjXSqXywOthZV25sOB1hqZ_0_1_1678201782",
  "@ingesttimestamp" : "1691483483696",
  "@rawstring" : "127.0.0.1 - - [07/Mar/2023:15:09:42 +0000] \"GET /falcon-logscale/css-images/176f8f5bd5f02b3abfcf894955d7e919.woff2 HTTP/1.1\" 200 15736 \"http://localhost:81/falcon-logscale/theme.css\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36\"",
  "@source" : "/var/log/apache2/access_log",
  "@timestamp" : 1678201782000,
  "@timestamp.nanos" : "0",
  "@timezone" : "Z"
}

Each record includes the full detail for each event, including parsed fields, the original raw event string, and tagged field entries.
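As an illustration of consuming the NDJSON format in an external system, the sketch below reads one archived file and yields each event as a dictionary. It assumes the `.gz` object is a gzip-compressed text file with one JSON object per line, and that `@timestamp` holds milliseconds since the Unix epoch, as the sample records suggest. The function names are hypothetical.

```python
import gzip
import json
from datetime import datetime, timezone
from typing import Iterator


def iter_archived_events(path: str) -> Iterator[dict]:
    """Yield one dict per NDJSON line of a downloaded archive file.

    Assumption: the file is gzip-compressed UTF-8 text, one JSON object per line.
    """
    with gzip.open(path, mode="rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:  # skip blank lines, if any
                yield json.loads(line)


def event_time(event: dict) -> datetime:
    """Convert the @timestamp field (assumed epoch milliseconds) to UTC."""
    return datetime.fromtimestamp(int(event["@timestamp"]) / 1000, tz=timezone.utc)
```

Tag fields keep their `#` prefix and metadata fields keep their `@` prefix, so a consumer can read, for example, `event["#type"]` or `event["@rawstring"]` directly.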

How Data is Uploaded to S3

Data is uploaded to S3 as soon as a segment file has been created during ingest (for more information, see Ingestion: Digest Phase).

Each segment file is sent as a multipart upload, so the upload of a single file may require multiple S3 requests. The exact number of requests depends on the rate of ingest, but expect roughly one request for each 8MB of ingested data.
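For capacity or cost planning, the rule of thumb above can be turned into a rough estimate. This sketch is only arithmetic on the stated rate. It reads "8MB" as 8 MiB, which is an assumption, and it ignores any extra requests needed to start or complete a multipart upload.

```python
import math

# Assumption: "8MB" is interpreted as 8 * 1024 * 1024 bytes.
BYTES_PER_REQUEST = 8 * 1024 * 1024


def estimate_upload_requests(ingested_bytes: int) -> int:
    """Rough number of S3 requests for a given volume of ingested data."""
    if ingested_bytes <= 0:
        return 0
    return math.ceil(ingested_bytes / BYTES_PER_REQUEST)
```

Under these assumptions, 1 GiB of ingested data corresponds to about 128 requests.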

Set up S3 archiving

To archive data to S3, you must do the following steps in order.

S3 Storage Configuration

Important

Contact LogScale Support for assistance to configure your LogScale instance for archiving.

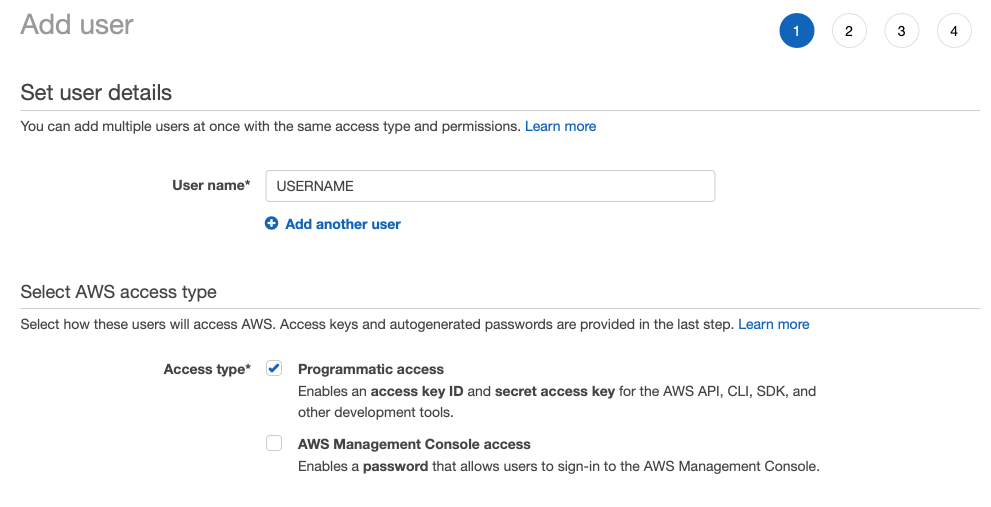

For LogScale, you need an IAM user with write access to the buckets used for archiving. That user must have programmatic access to S3, so when adding a new user through the AWS console make sure programmatic access is checked:

Figure 2. Setup

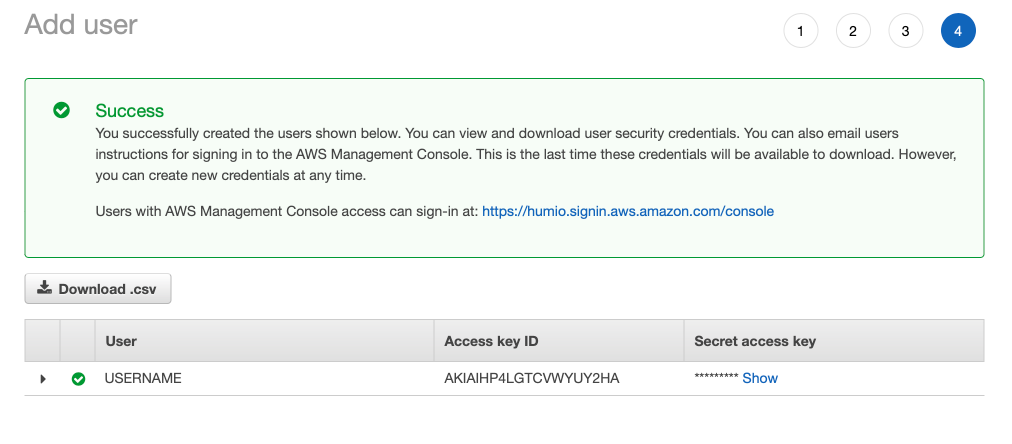

Later in the process, you can retrieve the access key and secret key:

Figure 3. Setup Key

Write down this information to give to LogScale Support for your archiving configuration in LogScale.

The keys are used for authenticating the user against the S3 service. For more guidance on how to retrieve S3 access keys, see AWS access keys. For more details on creating a new user, see creating a new user in IAM.

Once you have completed this configuration, choose whether to set up S3 archiving with an IAM role (recommended) or an IAM user.

Set up S3 archiving with IAM role

Note

From version 1.177, new S3 archiving configurations can only use IAM roles in AWS. Existing S3 archiving configurations that use an IAM user continue to work as expected, but you cannot create new user-based S3 archiving configurations for repositories in LogScale.

Configuring S3 archiving with the IAM role requires that the role has PutObject (write) permissions to the bucket. So in the diagram below, the AWS IAM User has the AWS IAM Role, which has PutObject (write) permissions for the S3 bucket.

Important

Prior to beginning the steps below, contact LogScale Support to ensure that your LogScale instance is configured for archiving and a repository has been created.

In LogScale:

Open the repository created and click . Click .

In AWS:

Log in to the AWS console and navigate to your S3 service page.

Create a new bucket. Follow the naming conventions described in the AWS documentation. In particular, use dashes rather than periods as separators, and do not repeat dashes or periods.

Navigate to IAM and click Roles.

Create a new role and select Custom trust policy. Copy and paste the trust policy below. You will customize it in the next steps.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowLogScaleS3Archiving",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::123456789012:user/LogScaleS3Archiving"
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": "2ph55aGUve1rxxLv9B4CFnnb/ItCOD9MHBBsna9KBiFFPahzq"
        }
      }
    }
  ]
}

Go to LogScale and in the S3 archiving page for your repository, copy the IAM identity. Switch to AWS and paste it into the AWS field in your trust policy. Do the same for the External ID. Then click in AWS.

Click to skip creating a policy for now.

Enter a name for the role. Click .

Search for the role you created and open it. Click the button and choose .

Switch the policy editor in AWS to use JSON and copy and paste the below into the policy editor. You will customize it in the next steps.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowWriteToBucket",
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::MyLogScaleS3ArchivingBucket/*",
      "Action": "s3:PutObject"
    }
  ]
}

In the pasted text, change the bucket name MyLogScaleS3ArchivingBucket/* to match the name of the bucket you created in Create new bucket. Be sure that the /* is preserved.

Enter a name for the policy and click .

Locate the ARN on the role in AWS and copy it.

Go to LogScale and in the S3 archiving page for your repository, paste the ARN you copied into Role ARN. Enter the bucket name and region. Click .
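Because the steps above involve editing two JSON documents by hand, it can help to generate them from the three values that actually vary. The sketch below mirrors the trust policy and permissions policy shown in the steps. The function names are hypothetical, and the IAM identity and External ID must be the values copied from the S3 archiving page in LogScale; the sample values in the usage note are placeholders taken from the example policies.

```python
import json


def build_trust_policy(logscale_iam_identity: str, external_id: str) -> dict:
    """Trust policy letting the LogScale IAM identity assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowLogScaleS3Archiving",
                "Effect": "Allow",
                "Principal": {"AWS": logscale_iam_identity},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "StringEquals": {"sts:ExternalId": external_id}
                },
            }
        ],
    }


def build_write_policy(bucket_name: str) -> dict:
    """Permissions policy granting PutObject on every key in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowWriteToBucket",
                "Effect": "Allow",
                # The trailing /* must be preserved so the policy covers objects.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                "Action": "s3:PutObject",
            }
        ],
    }


def to_json(policy: dict) -> str:
    """Render a policy as text suitable for pasting into the AWS policy editor."""
    return json.dumps(policy, indent=2)
```

For example, `to_json(build_write_policy("MyLogScaleS3ArchivingBucket"))` produces the permissions policy shown in the steps, ready to paste into the JSON policy editor.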

Troubleshoot S3 archiving configuration

If you encounter an access denied error message when configuring S3 archiving, check your configuration settings for missing information or typos.

Tag Grouping in AWS S3

From version 1.169, if tag grouping is applied for a repository, the archiving logic uploads each segment as a single S3 file, even though tag grouping means a segment may contain multiple unique combinations of tags. The TAG_VALUE part of the S3 file name that corresponds to a tag with tag grouping does not contain any of the specific values for the tag in that segment. Instead, it contains an internal value that denotes which tag group the segment belongs to. This is less human-readable than splitting a segment into one S3 file per unique tag combination, but it avoids the risk of a single segment being split into an unmanageable number of S3 files.

S3 archived log re-ingestion

You can re-ingest log data that has been written to an S3 bucket through S3 archiving by using Log Collector and the native JSON parsing within LogScale.

This process has the following requirements:

The files need to be downloaded from the S3 bucket to the machine running the Log Collector. The S3 files cannot be accessed natively by the Log Collector.

The events are ingested into a repository that is created for the purpose of receiving the data.

To re-ingest logs:

Create a repo in LogScale where the ingested data will be stored. See Creating a Repository or View.

Create an ingest token, and choose the JSON parser. See Assigning Parsers to Ingest Tokens.

Install the Falcon LogScale Collector and configure it to read files using the .gz extension as the file match. For example, use a configuration similar to this:

#dataDirectory is only required in the case of local configurations and must not be used for remote configuration files.
dataDirectory: data
sources:
  bucketdata:
    type: file
    # Glob patterns
    include:
      - /bucketdata/*.gz
    sink: my_humio_instance
    parser: json
...

For more information, see Configuration Examples.

Copy the log file from the S3 bucket into the configured directory (/bucketdata in the above example).

The Log Collector reads the copied file and sends it to LogScale, where the JSON event data is parsed and the events are recreated.
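The copy step can be scripted. The sketch below uses the third-party `boto3` AWS SDK to download archived objects into the directory the Log Collector watches. It is an illustration only: the function names are hypothetical, the credentials in use must have read access to the bucket (the archiving role described earlier only needs PutObject), and it assumes the archive was written in NDJSON format so the JSON parser can handle it. Because the example glob `/bucketdata/*.gz` matches a single directory, the object key is flattened into one file name.

```python
import os
from typing import List


def local_name_for_key(key: str) -> str:
    """Flatten an S3 object key into a single file name.

    The example collector glob (/bucketdata/*.gz) does not descend into
    subdirectories, so the key's path separators are replaced.
    """
    return key.replace("/", "_")


def download_archive(bucket: str, prefix: str,
                     target_dir: str = "/bucketdata") -> List[str]:
    """Download every .gz object under a prefix into target_dir."""
    # Imported here so the helper above can be used without boto3 installed.
    import boto3

    s3 = boto3.client("s3")
    os.makedirs(target_dir, exist_ok=True)

    downloaded = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent when a page holds no objects.
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if not key.endswith(".gz"):
                continue
            destination = os.path.join(target_dir, local_name_for_key(key))
            s3.download_file(bucket, key, destination)
            downloaded.append(destination)
    return downloaded
```

With the bucket and repository from the earlier file list, a call such as `download_archive("logscale2", "accesslog/")` would place the files in `/bucketdata`, where the collector configuration above picks them up.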