Testing GCP Deployment

Once the deployment is complete, test it as follows:

Sending Data to the Cluster

To send data to the cluster, we will create a new repository, obtain the ingest token, and then configure fluentbit to gather logs from all the pods in our Kubernetes cluster and send them to LogScale.

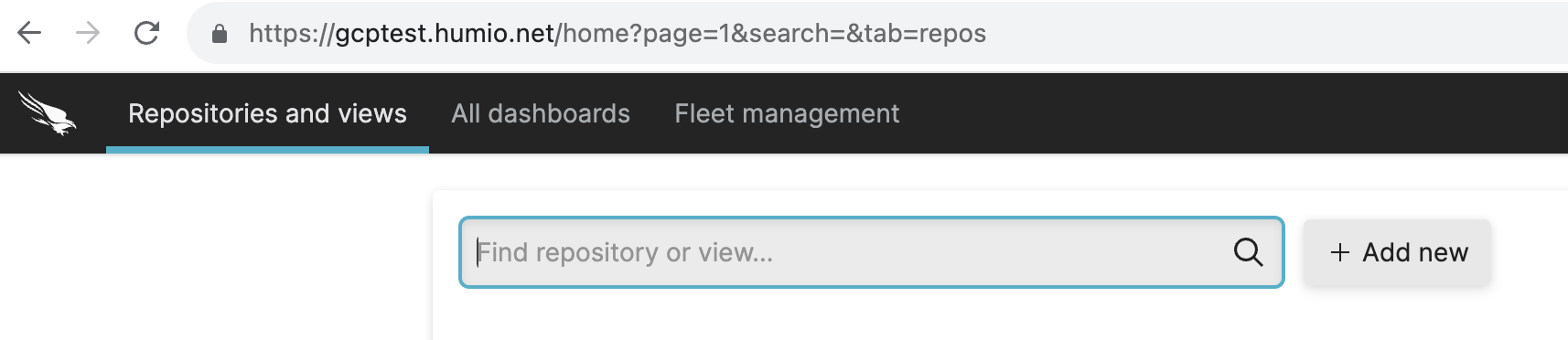

Create a repo using LogScale UI

Click the Add new button and create a new repository.

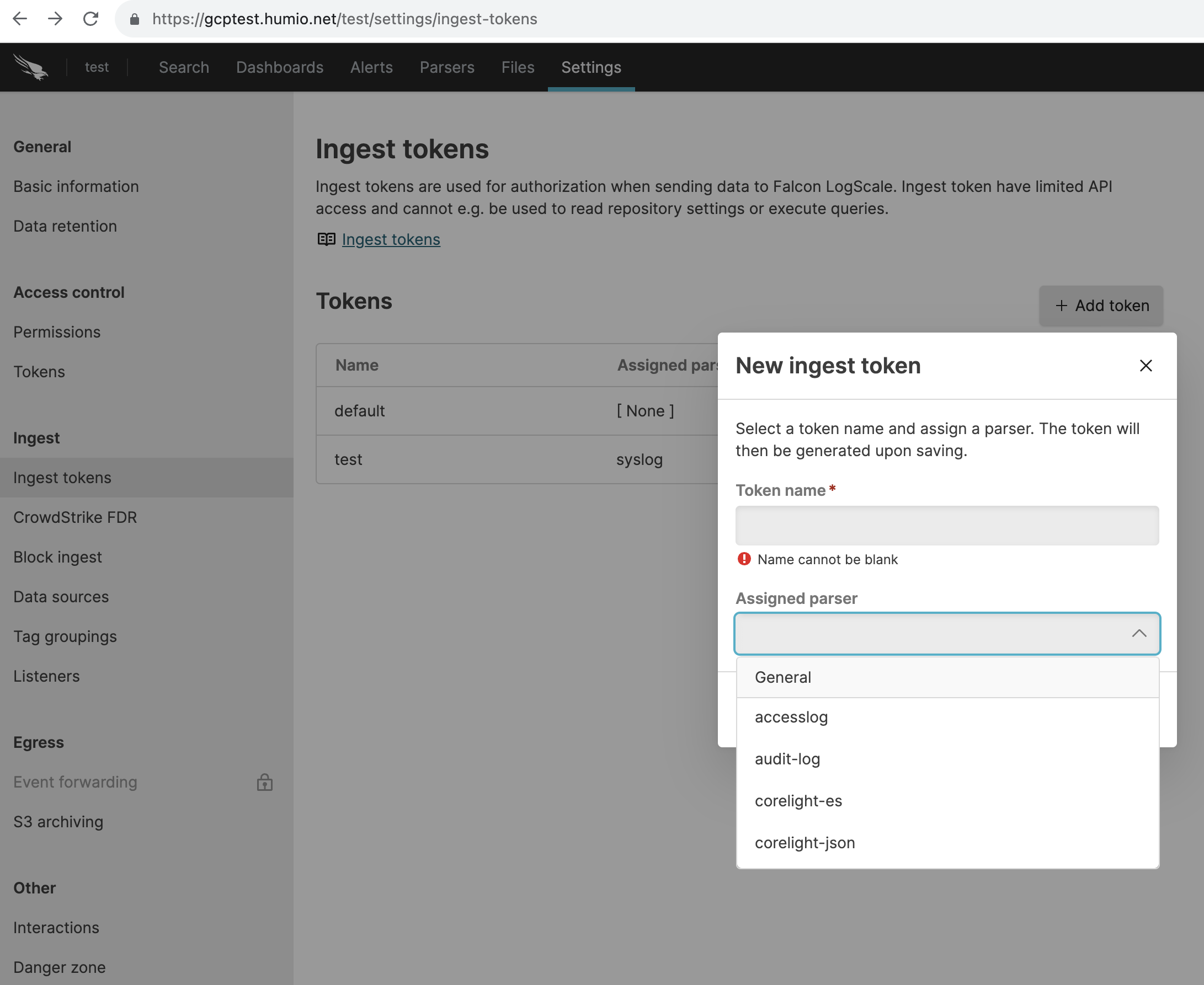

Create an ingest token

Go to the test repository you created. On the Settings tab, select Ingest tokens and create a new ingest token with any of the available parsers.

Ingest Logs to the Cluster

LogScale recommends using the Falcon Log Collector for ingesting data. The example below uses fluentbit to perform a simple connectivity test.

Now we'll install fluentbit into the Kubernetes cluster, configure the endpoint to point to our $INGRESS_HOSTNAME, and use the $INGEST_TOKEN that was just created.
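The commands in this section rely on both variables being set in the current shell. As a minimal sketch, the snippet below exports them and fails early if either is empty. The values shown are placeholders, and `require_var` is a hypothetical helper name introduced here for illustration, not part of LogScale or Helm.

```shell
# Placeholder values (assumptions): substitute your own ingress hostname
# and the ingest token created in the previous step.
export INGRESS_HOSTNAME="logscale.example.com"
export INGEST_TOKEN="REPLACE_WITH_INGEST_TOKEN"

# Hypothetical helper: print a confirmation if the named variable is
# non-empty, otherwise print an error to stderr and return non-zero.
require_var() {
  name="$1"
  # Look up the variable whose name is stored in $name.
  eval "value=\${$name:-}"
  if [ -z "$value" ]; then
    echo "error: $name is not set" >&2
    return 1
  fi
  echo "$name is set"
}

require_var INGRESS_HOSTNAME
require_var INGEST_TOKEN
```

Running this check before the helm commands makes a missing token show up as an explicit error rather than as an agent that installs but cannot authenticate.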

$ helm repo add humio https://humio.github.io/humio-helm-charts

$ helm repo update

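Using a text editor, create a file named humio-agent.yaml and copy the following lines into it, replacing $INGRESS_ES_HOSTNAME with your actual hostname (Helm does not expand shell variables inside a values file):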

humio-fluentbit:
  enabled: true
  humioHostname: $INGRESS_ES_HOSTNAME
  es:
    tls: true
    port: 443
  inputConfig: |-
    [INPUT]
        Name             tail
        Path             /var/log/containers/*.log
        Parser           docker
        # The path to the DB file must be unique and
        # not conflict with another fluentbit running on the same nodes.
        DB               /var/log/flb_kube.db
        Tag              kube.*
        Refresh_Interval 5
        Mem_Buf_Limit    512MB
        Skip_Long_Lines  On
  resources:
    limits:
      cpu: 100m
      memory: 1024Mi
    requests:
      cpu: 100m
      memory: 512Mi

Now configure this with helm:

$ helm install test humio/humio-helm-charts \
    --namespace logging \
    --set humio-fluentbit.token=$INGEST_TOKEN \
    --values humio-agent.yaml

Verify logs are ingested:

Go to the LogScale UI and click on the quickstart-cluster-logs repository

In the search field, enter:

"kubernetes.container_name" = "humio-operator"

Verify you can see the Humio Operator logs.
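If no logs appear, one thing worth checking is whether the fluentbit pods are actually running. The sketch below counts pods whose status is anything other than Running, reading the output of `kubectl get pods --no-headers` on standard input. The helper name `count_not_running` and the sample pod names are made up for illustration.

```shell
# Hypothetical helper: count pods whose STATUS column is not "Running".
# Expects `kubectl get pods --no-headers` output on stdin, where the
# columns are NAME, READY, STATUS, RESTARTS, AGE.
count_not_running() {
  awk '$3 != "Running" { n++ } END { print n + 0 }'
}

# Made-up sample output for illustration only; real pod names will differ.
sample='test-fluentbit-abc12   1/1   Running            0   2m
test-fluentbit-def34   0/1   CrashLoopBackOff   4   2m'

# One of the two sample pods is not Running, so this prints 1.
printf '%s\n' "$sample" | count_not_running
```

Against a live cluster, the same helper could be fed with `kubectl get pods --namespace logging --no-headers | count_not_running`, assuming the agent was installed into the logging namespace as above. A result of 0 suggests every listed pod is Running, but empty input also yields 0, so confirm that pods are listed at all.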