Amazon CloudWatch Log Format

LogScale's CloudWatch integration sends your AWS CloudWatch Logs and Metrics to LogScale by using AWS Lambda functions to ship the data. The integration does not ingest logs that already exist in CloudWatch; it only ships logs created after installation. To get existing logs into LogScale, you have to download them and use a log shipper.

The integration was last updated on January 6th 2023 to version v2.0.0. If you set up the integration prior to this date, you can update your current integration to the newest version. See the section Updating the Integration for more information.

Quick Installation

You can quickly get up and running using the integration via the Launch Stack buttons provided below to install in a region of your choice.

The quick launch uses LogScale hosted resources. The integration consists of a CloudFormation template and some Python code files used for AWS Lambda functions. If you install the integration using the quick launch buttons, the CloudFormation template is retrieved from the us-east-1 region and used to set up a CloudStack in the region you have chosen. The Python code files are also stored in the us-east-1 region, and resources are created during the installation to copy these code files into an S3 bucket that is created in your chosen region. All of this happens automatically when installing the integration.

If your AWS account blocks access to certain regions, access might be denied when using the CloudFormation template, with the LogScaleCloudWatchCopyZipCustom Lambda failing to run. To avoid this, allow your AWS account to access the us-east-1 region, where the installation files are stored.

Launch Stack

| Region | Link |
|---|---|
| US East (N. Virginia) - US East 1 | Install cloudwatch2logscale in US East 1 |
| US East (Ohio) - US East 2 | Install cloudwatch2logscale in US East 2 |
| US West (N. California) - US West 1 | Install cloudwatch2logscale in US West 1 |
| US West (Oregon) - US West 2 | Install cloudwatch2logscale in US West 2 |
| EU (Frankfurt) - EU Central 1 | Install cloudwatch2logscale in EU Central 1 |
| EU (Ireland) - EU West 1 | Install cloudwatch2logscale in EU West 1 |
| EU (London) - EU West 2 | Install cloudwatch2logscale in EU West 2 |
| EU (Paris) - EU West 3 | Install cloudwatch2logscale in EU West 3 |
| EU (Stockholm) - EU North 1 | Install cloudwatch2logscale in EU North 1 |
| AP (Mumbai) - AP South 1 | Install cloudwatch2logscale in AP South 1 |
| AP (Singapore) - AP Southeast 1 | Install cloudwatch2logscale in AP Southeast 1 |
| AP (Sydney) - AP Southeast 2 | Install cloudwatch2logscale in AP Southeast 2 |
| CA (Central) - CA Central 1 | Install cloudwatch2logscale in CA Central 1 |

If your region is missing, contact Support (<logscalesupport@crowdstrike.com>).

Launch Parameters

The integration is installed using a CloudFormation template.

The template supports the following parameters:

LogScale Settings:

LogScaleProtocol: The transport protocol used for delivering log events to LogScale. HTTPS is default and recommended, but HTTP is possible as well.

LogScaleHost: The host you want to ship your logs to. The default value is cloud.humio.com.

LogScaleIngestToken: The value of the ingest token for the LogScale repository that you want your logs delivered to. This parameter is required, as you cannot ingest log or metric events into LogScale without it.

VPC Settings:

EnableVPCForIngesterLambdas: Used to enable an AWS VPC for the ingester lambdas. If used, the parameters for security groups and subnet IDs also need to be specified. The default value is false.

SecurityGroups: Security groups used by the VPC to group the ingester lambdas. Only required if you are using a VPC.

SubnetIds: Subnet IDs used by the VPC to deploy the ingester lambdas into. Only required if you are using a VPC.

Lambda Settings:

EnableCloudWatchLogsAutoSubscription: Used to enable automatic subscription to new log groups, as specified with the subscription prefix parameter, when they are created. The default value is true.

CreateCloudTrailForAutoSubscription: If autosubscription to new log groups is desired, existing resources can be used instead of creating the needed resources (a CloudTrail and an S3 bucket). To use existing resources, set this to false. The default value is true. You can find more information under How the Integration Works.

Note

This parameter is only considered if autosubscription is enabled.

EnableCloudWatchLogsBackfillerAutoRun: Used to enable the backfiller to run automatically once created. This will subscribe the log ingester to all present log groups specified with the subscription prefix parameter. The default value is true.

Warning

This setting will delete all current subscriptions to the log groups that will be subscribed. This might disrupt any existing infrastructure that relies on those subscriptions.

LogScaleCloudWatchLogsSubscriptionPrefix: By adding this filter, the LogScale logs ingester will only subscribe to log groups whose paths start with this prefix. The default value is "", which will subscribe the log ingester to all log groups.

CreateCloudWatchMetricIngesterAndMetricStatisticsIngesterLambdas: Choose whether the metric ingester and the metric statistics ingester lambdas should be created. These are not necessary for getting CloudWatch logs into LogScale; they are only used for getting CloudWatch metrics into LogScale. The default value is false.

S3BucketContainingLambdaCodeFiles: The name of the S3 bucket containing the lambda code files used for the integration. Change this if you want to retrieve the code files from your own S3 bucket. The default value is logscale-public-us-east-1, which is the S3 bucket where LogScale is hosting the zip file with the code files.

S3KeyOfTheDeploymentPackageContainingLambdaCodeFiles: The name of the S3 key in the S3 bucket containing the lambda code files used for the integration. This is the zip file that CloudFormation will look for to find all the lambda code. Change this if you have named the deployment package containing the code files something other than the default. The default value is v2.0.0_cloudwatch2logscale.zip.

LogScaleLambdaLogLevel: The level of logs that should be logged by the functions. Possible values are, from the most verbose to the least verbose: DEBUG, INFO, WARNING, ERROR, CRITICAL. The default is INFO. The levels form a hierarchy in which a more verbose level also includes the logs of the less verbose levels, so setting this option to DEBUG enables all logs, whereas setting it to CRITICAL only enables logs classified as critical. This setting affects the additional costs from CloudWatch, since any level more verbose than WARNING makes the lambda functions log more information and increases the cost of running the integration. To avoid routine information about the integration while still logging errors, it is recommended to set this parameter to either WARNING or ERROR. For more information regarding how the logging works, see How the Integration Works.

LogScaleLambdaLogRetention: Number of days that the logs generated in CloudWatch by the integration's lambda functions will be retained.

The integration uses a set of AWS Lambdas, where some of these might need to be manually enabled before use depending on whether autosubscription and backfilling were enabled. See Configuring the Integration for details on how logs and metrics can be retrieved. However, if backfilling has been enabled, new logs should already be arriving in the LogScale host.

Manual Installation

The integration is available at our GitHub repository.

The following sections describe how to manually install the integration without using the launch buttons.

Pre-Requisites

AWS CLI installed and set up with an AWS account allowed to create a CloudFormation stack.

Python 3.x installed.

Setup

Clone the Git repository:

    https://github.com/humio/cloudwatch2humio

In the project folder, create a zip file with the contents of the src folder from the repository.

On Linux/macOS, this is done by using the provided makefile:

    make

On Windows, create a folder named target in the project root folder, and copy all files from src into it. Then install dependencies into the target folder using pip:

    pip3 install -r requirements.txt -t target

In the target folder, zip all files into YOUR-ZIP-FILE.zip. You can choose any name, because the parameter S3KeyOfTheDeploymentPackageContainingLambdaCodeFiles tells the CloudFormation template which name to look for.

Then create an AWS S3 bucket using the following command:

    aws s3api create-bucket --bucket YOUR-BUCKET --create-bucket-configuration LocationConstraint=YOUR-REGION

The name of the S3 bucket is also given to the CloudFormation template using a parameter, namely S3BucketContainingLambdaCodeFiles, so you can name it whatever you want.

Upload the zip file to the AWS S3 bucket:

    aws s3 cp target/YOUR-ZIP-FILE.zip s3://YOUR-BUCKET/

Create a parameters.json file in the project root folder, and specify the CloudFormation parameters, for example:

    [
      { "ParameterKey": "LogScaleProtocol", "ParameterValue": "https" },
      { "ParameterKey": "LogScaleHost", "ParameterValue": "cloud.humio.com" },
      { "ParameterKey": "LogScaleIngestToken", "ParameterValue": "YOUR-SECRET-INGEST-TOKEN" },
      { "ParameterKey": "EnableVPCForIngesterLambdas", "ParameterValue": "false" },
      { "ParameterKey": "SecurityGroups", "ParameterValue": "" },
      { "ParameterKey": "SubnetIds", "ParameterValue": "" },
      { "ParameterKey": "EnableCloudWatchLogsAutoSubscription", "ParameterValue": "true" },
      { "ParameterKey": "CreateCloudTrailForAutoSubscription", "ParameterValue": "true" },
      { "ParameterKey": "EnableCloudWatchLogsBackfillerAutoRun", "ParameterValue": "true" },
      { "ParameterKey": "LogScaleCloudWatchLogsSubscriptionPrefix", "ParameterValue": "logscale" },
      { "ParameterKey": "CreateCloudWatchMetricIngesterAndMetricStatisticsIngesterLambdas", "ParameterValue": "false" },
      { "ParameterKey": "S3BucketContainingLambdaCodeFiles", "ParameterValue": "YOUR-BUCKET" },
      { "ParameterKey": "S3KeyOfTheDeploymentPackageContainingLambdaCodeFiles", "ParameterValue": "YOUR-ZIP-FILE.zip" },
      { "ParameterKey": "LogScaleLambdaLogLevel", "ParameterValue": "WARNING" },
      { "ParameterKey": "LogScaleLambdaLogRetention", "ParameterValue": "1" }
    ]

Only the LogScaleIngestToken parameter is required in the parameters.json file, as the rest have default values. If you set EnableVPCForIngesterLambdas to true, SecurityGroups and SubnetIds must be specified as well.

Create the stack using the CloudFormation file and the parameters that you have defined:

    aws cloudformation create-stack --stack-name YOUR-DESIRED-STACK-NAME --template-body file://cloudformation.json --parameters file://parameters.json --capabilities CAPABILITY_IAM --region YOUR-REGION

To update the stack, make your changes and use the command:

    aws cloudformation update-stack --stack-name YOUR-DESIRED-STACK-NAME --template-body file://cloudformation.json --parameters file://parameters.json --capabilities CAPABILITY_IAM --region YOUR-REGION

The stack will only register changes in the CloudFormation file or in the parameters.json file. If you have updated the lambda files, you need to change the name of the zip file for your changes to be recognized.

To delete the stack, use the following command:

    aws cloudformation delete-stack --stack-name YOUR-DESIRED-STACK-NAME

Warning

Make sure that all the S3 buckets created using the CloudFormation template are empty before you try to delete the stack. Otherwise, the deletion will probably fail with an error.
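As a rough illustration of the parameters.json step, the sketch below builds the parameter list from a plain dictionary. The helper name build_parameters and its checks are hypothetical and not part of the integration; the only rules it encodes are the ones stated on this page (LogScaleIngestToken is required, and SecurityGroups and SubnetIds are needed when a VPC is enabled).

```python
import json


def build_parameters(settings):
    """Turn a {name: value} dict into the CloudFormation parameter list format.

    Hypothetical helper for illustration only.
    """
    if not settings.get("LogScaleIngestToken"):
        raise ValueError("LogScaleIngestToken is required")
    if settings.get("EnableVPCForIngesterLambdas") == "true":
        # This page states that both values must be set when a VPC is used.
        for name in ("SecurityGroups", "SubnetIds"):
            if not settings.get(name):
                raise ValueError(f"{name} is required when a VPC is enabled")
    return [
        {"ParameterKey": key, "ParameterValue": value}
        for key, value in settings.items()
    ]


if __name__ == "__main__":
    params = build_parameters({
        "LogScaleIngestToken": "YOUR-SECRET-INGEST-TOKEN",
        "LogScaleLambdaLogLevel": "WARNING",
    })
    # Print the JSON that could be saved as parameters.json.
    print(json.dumps(params, indent=2))
```

Parameters left out of the dictionary fall back to the template defaults, which is why the example only sets two of them.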

Configuring the Integration

The integration might need to be manually activated before logs and metrics are shipped to LogScale. This is the case if you set both the EnableCloudWatchLogsAutoSubscription and EnableCloudWatchLogsBackfillerAutoRun parameters to false. These two parameters are used for automatically subscribing the integration to existing log groups (the backfiller) or new log groups (the autosubscriber) with logs that should be shipped to LogScale.

Retrieving CloudWatch Logs

The integration uses three lambdas to send CloudWatch logs to LogScale:

LogScaleCloudWatchLogsIngester

LogScaleCloudWatchLogsSubscriber

LogScaleCloudWatchLogsBackfiller

If you only want specific log groups to be ingested into LogScale, you can use the LogScaleCloudWatchLogsSubscriber lambda function manually and only subscribe the LogScaleCloudWatchLogsIngester lambda to one log group at a time.

If you want to subscribe to all log groups available, you can use the LogScaleCloudWatchLogsBackfiller. If you set the EnableCloudWatchLogsBackfillerAutoRun parameter to true when creating the CloudStack, you do not have to trigger it manually, as it should already have run on creation and subscribed the LogScaleCloudWatchLogsIngester lambda to all targeted log groups.

Otherwise, both lambdas need to be enabled using test events. Remember that the backfiller and the autosubscription use the parameter LogScaleCloudWatchLogsSubscriptionPrefix to determine which log groups to target.

For the LogScaleCloudWatchLogsSubscriber lambda, configure your test event like the example below, with "EXAMPLE" replaced by the name of an actual log group, and then run the test.

    {
      "detail": { "requestParameters": { "logGroupName": "EXAMPLE" } }
    }
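If you prefer to trigger the subscriber from a script rather than from the console, the same event can be built and sent with Boto3. This is a minimal sketch under assumptions: the helper names are hypothetical, and function_name must be the name of the deployed subscriber function in your account, which may differ from the resource name used on this page.

```python
import json


def build_subscriber_event(log_group_name):
    """Build the test event shape shown above for one log group."""
    return {"detail": {"requestParameters": {"logGroupName": log_group_name}}}


def invoke_subscriber(function_name, log_group_name):
    """Invoke the subscriber lambda synchronously and return the status code."""
    import boto3  # imported here so build_subscriber_event works without boto3

    client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=function_name,  # assumption: name of your deployed function
        InvocationType="RequestResponse",
        Payload=json.dumps(build_subscriber_event(log_group_name)).encode("utf-8"),
    )
    return response["StatusCode"]
```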

For the LogScaleCloudWatchLogsBackfiller lambda, use the default test event and run the test. This might take a while depending on the number of log groups that you are subscribing to.
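To make the backfilling idea concrete, the sketch below lists log groups by prefix and subscribes a destination to each of them. It is not the integration's actual code: the function name and the filter name are hypothetical, and unlike the real backfiller it does not remove existing subscriptions first. It takes the CloudWatch Logs client as an argument, for example boto3.client("logs").

```python
def subscribe_matching_groups(logs_client, prefix, destination_arn,
                              filter_name="logscale-example-filter"):
    """Subscribe destination_arn to every log group whose name starts with prefix.

    Hypothetical sketch; filter_name is an assumed value.
    """
    paginator = logs_client.get_paginator("describe_log_groups")
    # An empty prefix means "all log groups". The API expects a non-empty
    # logGroupNamePrefix, so the argument is only passed when it is set.
    kwargs = {"logGroupNamePrefix": prefix} if prefix else {}
    subscribed = []
    for page in paginator.paginate(**kwargs):
        for group in page["logGroups"]:
            name = group["logGroupName"]
            logs_client.put_subscription_filter(
                logGroupName=name,
                filterName=filter_name,
                filterPattern="",  # an empty pattern forwards every event
                destinationArn=destination_arn,
            )
            subscribed.append(name)
    return subscribed
```

Because each log group needs its own API call, the run time grows with the number of matching log groups, which is consistent with the note above that backfilling can take a while.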

Retrieving CloudWatch Metrics

For retrieving CloudWatch Metrics, the integration can use either one of the two lambda functions:

LogScaleCloudWatchMetricIngester

LogScaleCloudWatchMetricStatisticsIngester

Both lambdas can essentially retrieve the same information, but there are some differences in their limitations and cost.

The two lambda functions will only be created if the parameter CreateCloudWatchMetricIngesterAndMetricStatisticsIngesterLambdas is set to true.

Request parameters are already defined for both of these lambda functions. Running either function with the default test parameters makes a request that retrieves metrics about the number of AWS Lambda invocations made. By default, the request only looks at metrics from the last 15 minutes.

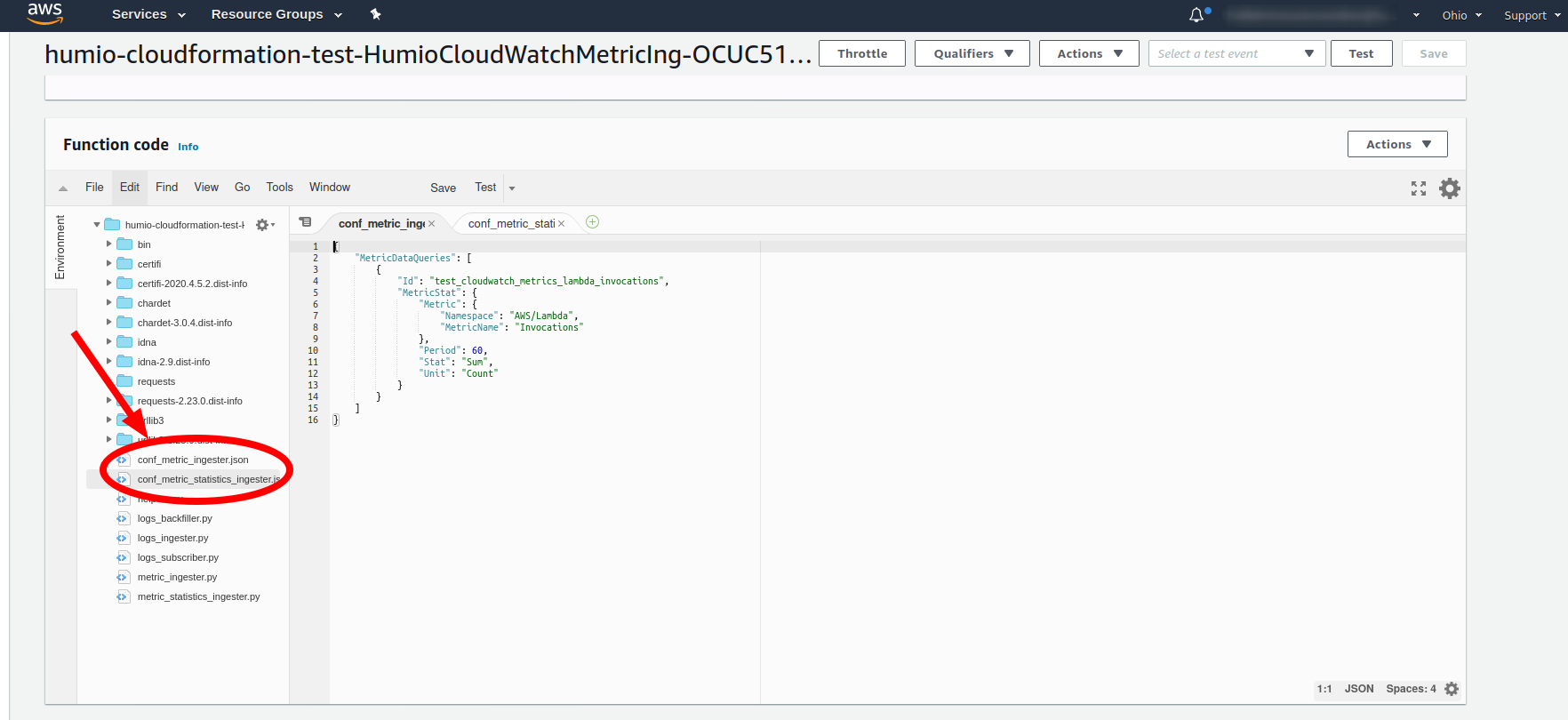

To change the API request parameters, you can edit the conf_metric_ingester.json file for the LogScaleCloudWatchMetricIngester lambda, and the conf_metric_statistics_ingester.json file for the LogScaleCloudWatchMetricStatisticsIngester lambda.

These can be found in your Lambda Console for each lambda under the Function code section.


Figure 82. Configuration File

Change this code according to which metrics you want to retrieve. For more information regarding the different options available, consult the AWS documentation regarding metrics, and for the specifics regarding the requests, consult the Boto3 Docs for either the get_metric_data(**kwargs) function or the get_metric_statistics(**kwargs) function.
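For orientation, the sketch below builds keyword arguments for a Boto3 get_metric_data call that asks for the number of AWS Lambda invocations over the last 15 minutes. It only illustrates the shape of such a request: the helper name, the query Id, and the Period and Stat values are assumptions, and the layout of the integration's own configuration files may differ.

```python
from datetime import datetime, timedelta, timezone


def build_metric_data_request(minutes=15, now=None):
    """Build keyword arguments for CloudWatch get_metric_data (illustrative)."""
    end = now or datetime.now(timezone.utc)
    start = end - timedelta(minutes=minutes)
    return {
        "MetricDataQueries": [
            {
                "Id": "lambda_invocations",  # assumed identifier for this query
                "MetricStat": {
                    "Metric": {
                        "Namespace": "AWS/Lambda",
                        "MetricName": "Invocations",
                    },
                    "Period": 300,  # assumed granularity in seconds
                    "Stat": "Sum",
                },
            }
        ],
        "StartTime": start,
        "EndTime": end,
    }
```

The result could be passed on as boto3.client("cloudwatch").get_metric_data(**build_metric_data_request()).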

Updating the Integration

When the codebase for the integration is updated, you can update your current integration setup as well, but if you do not have issues, it probably isn't necessary.

To update the cloudwatch2logscale integration, go to Services → CloudFormation, find the cloudwatch2logscale stack, and update it using the newest version of the CloudFormation template.

The newest CloudFormation template should already use the most recent version of the deployment package for the S3KeyOfTheDeploymentPackageContainingLambdaCodeFiles parameter. If this parameter value is the same as the one you already use, then updating the integration will not change the lambda functions' code files, and might not do anything at all unless the CloudFormation template specifies something new.

Deleting the Integration

To delete the cloudwatch2logscale integration, find its CloudStack in Services → CloudFormation and delete it.

The most common error when trying to delete the stack is that one of the two S3 buckets created during setup is not empty. Most often, it is the S3 bucket associated with autosubscription, which is used by the CloudTrail.

Note

This S3 bucket is only created if the parameter EnableCloudWatchLogsAutoSubscription was set to true.

As this bucket gathers events continuously, it tends not to be empty, and CloudFormation is not allowed to delete a bucket that is not empty. The other S3 bucket, which is created to hold the copied zip file containing the code files for the lambda functions, should be deleted automatically by the integration.

To fix this error if it arises, go to your view of S3 buckets under Services → S3, make sure that both buckets are empty, and try to delete the CloudStack again. The S3 bucket used for autosubscription is continuously updated with CloudTrail logs, so you have to empty the bucket right before deleting the CloudStack.
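Emptying the bucket can also be scripted. The sketch below is a hypothetical helper, not part of the integration; it takes an S3 client such as boto3.client("s3") and the bucket name, and it assumes that the bucket is not versioned (a versioned bucket would also need its object versions removed).

```python
def empty_bucket(s3_client, bucket_name):
    """Delete every object in the bucket and return how many were removed.

    Hypothetical sketch; assumes an unversioned bucket.
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    deleted = 0
    for page in paginator.paginate(Bucket=bucket_name):
        # Pages without a "Contents" key hold no objects.
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # One page holds at most 1000 keys, which is also the
            # maximum that delete_objects accepts per request.
            s3_client.delete_objects(
                Bucket=bucket_name,
                Delete={"Objects": keys},
            )
            deleted += len(keys)
    return deleted
```

Since CloudTrail keeps writing to the autosubscription bucket, run something like this immediately before deleting the CloudStack.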

How the Integration Works

The integration consists of a CloudFormation template and some Python code files zipped into a deployment package. These two resources are hosted in the S3 bucket logscale-public-us-east-1, or the files can be found in the GitHub repository. Using the CloudFormation template will create resources in your AWS environment depending on which parameters are set.

In total, the following resources can be created:

6 lambda functions with 2 related permissions and 6 log group specifications

2 custom resources

2 S3 buckets and 1 S3 bucket policy

1 role

1 rule

1 CloudTrail

However, not all of these resources are necessarily created.

The integration always installs at a minimum the following resources:

LogScaleCloudWatchLogsIngester: An AWS Lambda which handles sending logs to LogScale. It does this by being subscribed to one or more log groups. Whenever a new log is created in a log group, the log is pushed to this lambda function, which does some data processing before sending it on to LogScale.

LogScaleCloudWatchLogsIngesterPermission: An AWS Lambda Permission which allows the 'logs.amazonaws.com' service to invoke the LogScaleCloudWatchLogsIngester lambda function. This lambda function is invoked when the subscribed log groups push new logs to it.

LogScaleCloudWatchLogsSubscriber: An AWS Lambda which is used when manually subscribing the LogScaleCloudWatchLogsIngester to a log group, or when EnableCloudWatchLogsAutoSubscription is set to true. In the latter case, it automatically subscribes the ingester lambda function to new log groups based on the LogScaleCloudWatchLogsSubscriptionPrefix parameter. If autosubscription is used, however, additional resources will be created.

LogScaleCloudWatchLogsBackfiller: An AWS Lambda which is used for subscribing the LogScaleCloudWatchLogsIngester to existing log groups based on the prefix parameter LogScaleCloudWatchLogsSubscriptionPrefix. This lambda function is triggered automatically when the CloudStack is created if the parameter EnableCloudWatchLogsBackfillerAutoRun is set to true; this also creates the AWS Custom Resource LogScaleBackfillerAutoRunner, which triggers the function. Otherwise, the backfiller needs to be triggered manually.

LogScaleCloudWatchCopyZipLambda: An AWS Lambda which is used for copying the source files containing the code for the other lambda functions into the S3 bucket LogScaleCloudWatchLambdaZipBucket, which is created in the region where the CloudStack is located. The CloudStack cannot retrieve files from S3 buckets located in other regions, which is why this is necessary.

LogScaleCloudWatchLambdaZipBucket: An AWS S3 Bucket which the lambda source files are copied to from the S3 bucket logscale-public-us-east-1.

LogScaleCloudWatchCopyZipCustom: An AWS Custom Resource used for triggering the lambda function LogScaleCloudWatchCopyZipLambda during the creation of the CloudStack.

LogScaleCloudWatchRole: An AWS IAM Role given to all of the lambda functions to allow them to perform actions on other resources.
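To give an idea of what the data processing in a subscribed lambda involves: CloudWatch Logs delivers subscription data to a lambda as a base64-encoded, gzip-compressed JSON document under the awslogs.data key of the event. The sketch below decodes such an event. The function names are hypothetical, and this is not the ingester's actual code, which does further processing before shipping events to LogScale.

```python
import base64
import gzip
import json


def decode_subscription_event(event):
    """Decode the payload CloudWatch Logs delivers to a subscribed lambda."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def to_lines(payload):
    """Flatten a decoded payload into (log group, timestamp, message) tuples."""
    return [
        (payload["logGroup"], entry["timestamp"], entry["message"])
        for entry in payload["logEvents"]
    ]
```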

The following additional resources can be created depending on how the CloudFormation parameters are set:

LogScaleBackfillerAutoRunner: An AWS Custom Resource which is created when the EnableCloudWatchLogsBackfillerAutoRun parameter is set to true. When it is created, this resource triggers the LogScaleCloudWatchLogsBackfiller lambda function, which then subscribes the LogScaleCloudWatchLogsIngester lambda function to all log groups with the prefix specified with the LogScaleCloudWatchLogsSubscriptionPrefix parameter.

LogScaleCloudWatchLogsSubscriberCloudTrail: An AWS CloudTrail which is used to store log events, so that it is possible to detect whether a new 'CreateLogGroup' event has occurred. This is part of the autosubscription feature, and this trail is used to help trigger the LogScaleCloudWatchLogsSubscriber automatically. This trail is only created if the parameter EnableCloudWatchLogsAutoSubscription is set to true (to enable the creation of autosubscription resources), and the parameter CreateCloudTrailForAutoSubscription is set to true as well (to specifically also create this trail and the S3 bucket it needs). An existing trail can also be used for this flow, which is why the latter parameter exists. You can only have one free CloudTrail per account, so if there is an existing one, it is probably preferable to use that one to save cost. If you use an existing trail, make sure that it logs the event type 'management events'.

LogScaleCloudWatchLogsSubscriberS3Bucket: An AWS S3 Bucket used by LogScaleCloudWatchLogsSubscriberCloudTrail, as it needs somewhere to store its logs. This resource is only created if the parameter EnableCloudWatchLogsAutoSubscription is set to true (to enable the creation of autosubscription resources), and the parameter CreateCloudTrailForAutoSubscription is set to true as well (to specifically also create the trail and this S3 bucket needed by the trail).

LogScaleCloudWatchLogsSubscriberS3BucketPolicy: An AWS S3 Bucket Policy which allows LogScaleCloudWatchLogsSubscriberCloudTrail to put objects into the LogScaleCloudWatchLogsSubscriberS3Bucket bucket. This resource is only created if the parameter EnableCloudWatchLogsAutoSubscription is set to true (to enable the creation of autosubscription resources), and the parameter CreateCloudTrailForAutoSubscription is set to true as well (to specifically also create the trail and the S3 bucket needed by the trail).

LogScaleCloudWatchLogsSubscriberEventRule: An AWS Events Rule which detects the event 'CreateLogGroup' logged by a CloudTrail and triggers the LogScaleCloudWatchLogsSubscriber lambda function, which determines whether it needs to subscribe to the log group based on whether it matches the LogScaleCloudWatchLogsSubscriptionPrefix parameter. This rule is only created if the parameter EnableCloudWatchLogsAutoSubscription is set to true.

LogScaleCloudWatchLogsSubscriberPermission: An AWS Lambda Permission which allows the resource LogScaleCloudWatchLogsSubscriberEventRule from the 'events.amazonaws.com' service to invoke the LogScaleCloudWatchLogsSubscriber lambda function. This permission is only created if the parameter EnableCloudWatchLogsAutoSubscription is set to true.

LogScaleCloudWatchMetricIngester: An AWS Lambda function which can deliver CloudWatch Metrics to LogScale. The GetMetricData action from the CloudWatch API reference is used by this function. This lambda is only created if the CreateCloudWatchMetricIngesterAndMetricStatisticsIngesterLambdas parameter is set to true. There is not yet any automation in the integration for sending these metrics, so this lambda function has to be triggered manually.

LogScaleCloudWatchMetricStatisticsIngester: An AWS Lambda function which can deliver CloudWatch Metrics to LogScale. The GetMetricStatistics action from the CloudWatch API reference is used by this function. This lambda is only created if the CreateCloudWatchMetricIngesterAndMetricStatisticsIngesterLambdas parameter is set to true. There is not yet any automation in the integration for sending these metrics, so this lambda function has to be triggered manually.

Furthermore, log groups are created for each of the lambda functions. These log groups are specified in the CloudFormation template, so the parameters LogScaleLambdaLogRetention and LogScaleLambdaLogLevel are taken into consideration.

The LogScaleLambdaLogRetention parameter determines how many days the logs for the integration's lambda functions are stored in CloudWatch.

The LogScaleLambdaLogLevel parameter determines which logs from the lambda functions are logged in CloudWatch. The parameter may be set to one of the following log levels:

DEBUG: Used in relation to diagnosing problems.

INFO: Used in relation to expected behavior.

WARNING: Used in relation to unexpected behavior.

ERROR: Used in relation to errors.

CRITICAL: Used in relation to serious errors. No logs in the integration are considered critical, so selecting this option will disable all logs created by code from the lambda functions.

See the Python documentation for more information regarding the logging library used by the lambda functions.
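The effect of the log level can be tried out locally with Python's standard logging module. The sketch below is only an illustration with a hypothetical helper name; it reports which of the five levels pass through for a given configured level, and it is not taken from the integration's code.

```python
import io
import logging


def emitted_levels(configured_level):
    """Return the names of the levels that are emitted at configured_level."""
    stream = io.StringIO()
    logger = logging.getLogger(f"example.{configured_level}")
    logger.handlers.clear()
    logger.propagate = False  # keep the example output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s"))
    logger.addHandler(handler)
    logger.setLevel(configured_level)
    # Emit one message at every level and record which ones get through.
    for level in ("DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"):
        logger.log(getattr(logging, level), "example message")
    return stream.getvalue().split()
```

For example, emitted_levels("WARNING") returns ['WARNING', 'ERROR', 'CRITICAL'], while emitted_levels("DEBUG") returns all five levels.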