Basic Security and Resource Configuration

The following example provides the configuration for a basic cluster on AWS using ephemeral disks and bucket storage. Access to S3 is handled using IRSA (IAM roles for service accounts), and SSO is handled through a Google Workspace SAML integration. This example assumes that IRSA and the Google Workspace integration are already configured and that their details can be supplied in the configuration below.

Before the cluster is created, the operator must be deployed to the Kubernetes cluster and three secrets must be created. For information on installing the operator, refer to Install Humio Operator on Kubernetes.

Prerequisite secrets:

Bucket storage encryption key: used for encrypting and decrypting all files stored using bucket storage.

SAML IDP certificate: used to verify integrity during SAML SSO logins.

LogScale license key: installed by the humio-operator during cluster creation. To update the license key for a LogScale cluster managed by humio-operator, update this Kubernetes secret with the new license key. If the license key is changed within the LogScale UI instead, it will be reverted to the license stored in this Kubernetes secret.
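The license update described in the last item can be scripted. The following is a minimal sketch, not an official procedure: the helper name update_license and the KUBECTL override variable are hypothetical, while the namespace, secret name, and data key are the ones used in this example. It renders the secret locally with --dry-run=client and pipes the result to kubectl apply, so an existing secret is updated in place rather than deleted and recreated.

```shell
# KUBECTL is a hypothetical override; point it at another command to preview calls.
KUBECTL="${KUBECTL:-kubectl}"

# update_license NAMESPACE SECRET_NAME LICENSE_STRING  (hypothetical helper)
update_license() {
  local namespace="$1" secret="$2" license="$3"
  # Render the secret manifest locally, then apply it so an existing
  # secret with the same name is updated instead of causing an error.
  "$KUBECTL" create secret generic "$secret" \
    --namespace "$namespace" \
    --from-literal=data="$license" \
    --dry-run=client -o yaml |
    "$KUBECTL" apply --namespace "$namespace" -f -
}

# Example call (requires access to the cluster):
# update_license example-clusters basic-cluster-1-license "newLicenseString"
```

This only concerns later license changes; the initial creation of the three secrets is covered next.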

The three secrets can be created as follows. The encryption key is stripped of newlines and quoted because openssl wraps long base64 output across lines, which would otherwise split the value into separate arguments:

    kubectl create secret --namespace example-clusters generic \
        basic-cluster-1-bucket-storage --from-literal=encryption-key="$(openssl rand -base64 64 | tr -d '\n')"

    kubectl create secret --namespace example-clusters generic \
        basic-cluster-1-idp-certificate --from-file=idp-certificate.pem=./my-idp-certificate.pem

    kubectl create secret --namespace example-clusters generic \
        basic-cluster-1-license --from-literal=data=licenseString

Once the secrets are created, the following cluster specification can be applied to the cluster. For details on applying the specification, see Creating the Resource in the operator resources documentation.

    apiVersion: core.humio.com/v1alpha1
    kind: HumioCluster
    metadata:
      name: basic-cluster-1
      namespace: example-clusters
    spec:
      license:
        secretKeyRef:
          name: basic-cluster-1-license
          key: data
      image: "humio/humio-core:1.82.0"
      targetReplicationFactor: 2
      nodeCount: 3
      storagePartitionsCount: 720
      digestPartitionsCount: 720
      resources:
        limits:
          memory: 128Gi
          cpu: 31
        requests:
          memory: 128Gi
          cpu: 31
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes_worker_node_group
                    operator: In
                    values:
                      - humio-workers
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app.kubernetes.io/name
                    operator: In
                    values:
                      - humio
              topologyKey: kubernetes.io/hostname
      dataVolumeSource:
        hostPath:
          path: "/mnt/disks/vol1"
          type: "Directory"
      humioServiceAccountAnnotations:
        eks.amazonaws.com/role-arn: "arn:aws:iam::111111111111:role/SomeRoleWithDesiredS3Access"
      environmentVariables:
        - name: S3_STORAGE_BUCKET
          value: "basic-cluster-1-storage"
        - name: S3_STORAGE_REGION
          value: "us-west-2"
        - name: S3_STORAGE_ENCRYPTION_KEY
          valueFrom:
            secretKeyRef:
              name: basic-cluster-1-bucket-storage
              key: encryption-key
        - name: USING_EPHEMERAL_DISKS
          value: "true"
        - name: S3_STORAGE_PREFERRED_COPY_SOURCE
          value: "true"
        - name: KAFKA_SERVERS
          value: "kafka-basic-cluster-1-bootstrap:9092"
        - name: AUTHENTICATION_METHOD
          value: saml
        - name: AUTO_CREATE_USER_ON_SUCCESSFUL_LOGIN
          value: "true"
        - name: PUBLIC_URL
          value: https://basic-cluster-1.logscale.local
        - name: SAML_IDP_SIGN_ON_URL
          value: https://accounts.google.com/o/saml2/idp?idpid=idptoken
        - name: SAML_IDP_ENTITY_ID
          value: https://accounts.google.com/o/saml2/idp?idpid=idptoken
        - name: INGEST_QUEUE_INITIAL_REPLICATION_FACTOR
          value: "3"
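Applying the specification can be sketched as a small shell helper. This is an illustration under stated assumptions rather than the documented procedure: the file name basic-cluster-1.yaml, the helper names, and the KUBECTL override variable are hypothetical. The helper first runs a server-side dry run, which sends the object through API server validation without persisting it, and applies the manifest only if that succeeds.

```shell
# KUBECTL is a hypothetical override; point it at another command to preview calls.
KUBECTL="${KUBECTL:-kubectl}"

# apply_cluster_spec MANIFEST_FILE  (hypothetical helper)
apply_cluster_spec() {
  local manifest="$1"
  # Validate against the API server first; apply only when validation passes.
  "$KUBECTL" apply --dry-run=server -f "$manifest" &&
    "$KUBECTL" apply -f "$manifest"
}

# show_cluster NAMESPACE NAME  (hypothetical helper)
# Prints the HumioCluster resource; the columns shown depend on the operator version.
show_cluster() {
  "$KUBECTL" get humiocluster "$2" --namespace "$1"
}

# Example calls (require access to the cluster):
# apply_cluster_spec basic-cluster-1.yaml
# show_cluster example-clusters basic-cluster-1
```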

Once applied, the HumioCluster resource is created along with many other resources, some of which depend on cert-manager. In the basic cluster example a single node pool is created with three pods performing all tasks.
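One way to confirm that the three pods exist and landed on separate nodes is to list them by label. The sketch below assumes the pods carry the app.kubernetes.io/name=humio label that the podAntiAffinity rule in the specification selects on; the helper name and the KUBECTL override variable are hypothetical.

```shell
# KUBECTL is a hypothetical override; point it at another command to preview calls.
KUBECTL="${KUBECTL:-kubectl}"

# list_logscale_pods NAMESPACE  (hypothetical helper)
list_logscale_pods() {
  local namespace="$1"
  # -o wide adds the NODE column, which shows where each pod was scheduled.
  "$KUBECTL" get pods --namespace "$namespace" \
    --selector app.kubernetes.io/name=humio -o wide
}

# Example call (requires access to the cluster):
# list_logscale_pods example-clusters
```

With the anti-affinity rule on kubernetes.io/hostname in place, each listed pod should report a different node.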

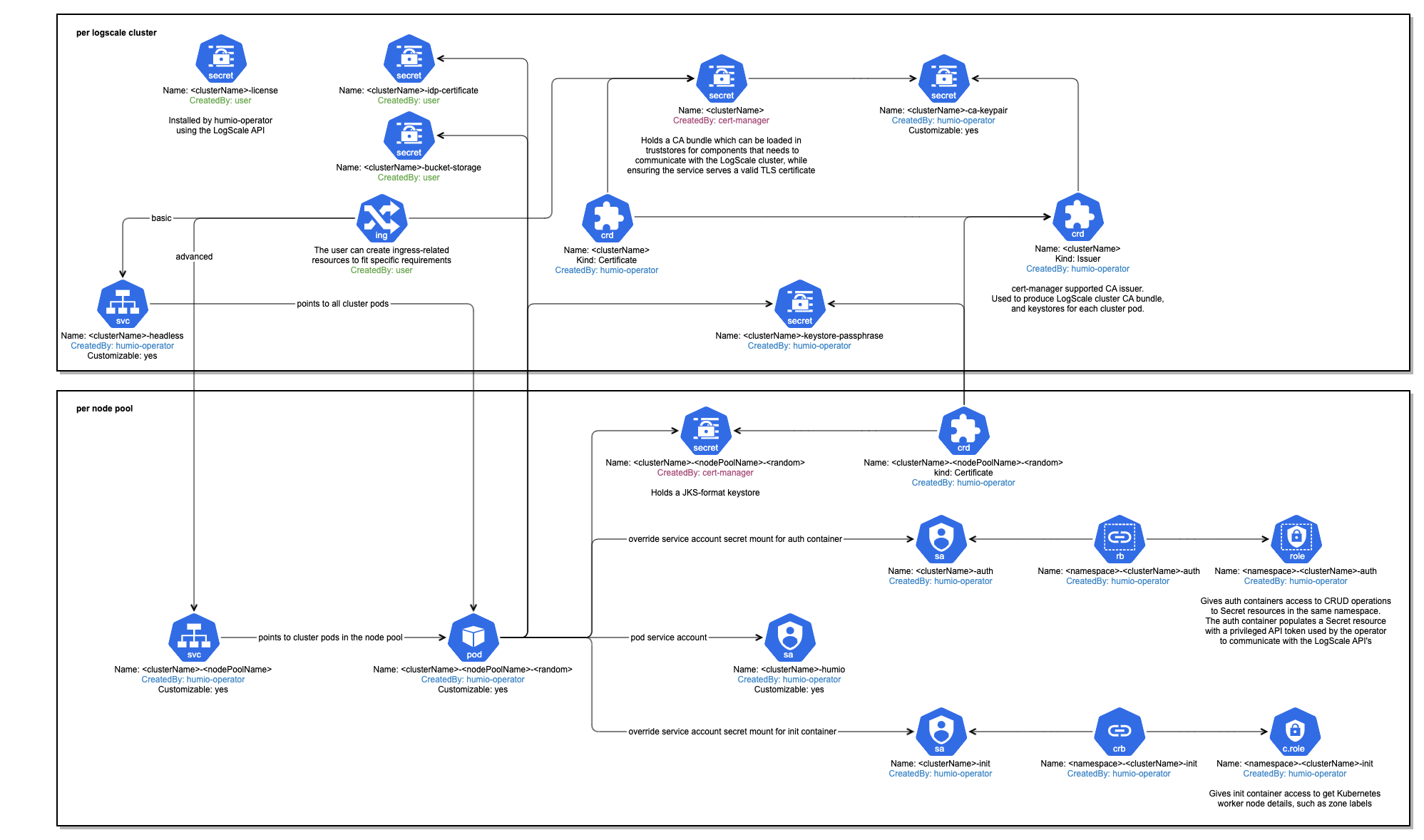

The overall structure of the Kubernetes resources within a LogScale deployment looks like this:

|

Figure 14. Kubernetes Installation Cluster Definition

Any LogScale configuration setting can be used in the cluster specification. For additional configuration options, see Configuration Variables.
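Additional settings are normally added by editing the environmentVariables list in the manifest and applying it again. As an alternative illustration, the sketch below appends one entry to the list on a live resource using a JSON patch. The variable name EXAMPLE_SETTING is a placeholder and not a real LogScale setting, the helper name and KUBECTL override are hypothetical, and the name and value are assumed to contain no characters that need JSON escaping.

```shell
# KUBECTL is a hypothetical override; point it at another command to preview calls.
KUBECTL="${KUBECTL:-kubectl}"

# add_env_var NAMESPACE CLUSTER NAME VALUE  (hypothetical helper)
add_env_var() {
  local namespace="$1" cluster="$2" name="$3" value="$4"
  local patch
  # JSON Patch "add" with a trailing "/-" appends to the end of the array.
  patch="$(printf '[{"op":"add","path":"/spec/environmentVariables/-","value":{"name":"%s","value":"%s"}}]' "$name" "$value")"
  "$KUBECTL" patch humiocluster "$cluster" --namespace "$namespace" \
    --type=json -p "$patch"
}

# Example call (requires access to the cluster; EXAMPLE_SETTING is a placeholder):
# add_env_var example-clusters basic-cluster-1 EXAMPLE_SETTING "1"
```

Patching the live resource leaves the manifest file out of date, so the same entry should also be added to the file if it is kept under version control.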